The recent challenge to DPDPA 2023 mounted in the Supreme Court by a few PIL advocates relies heavily on the argument that Section 44(3) of the Act, which amends Section 8(1)(j) of the RTI Act 2005, fails the “proportionality test” because the need to protect “Privacy” is allowed to restrict the “need to share information in public interest”.

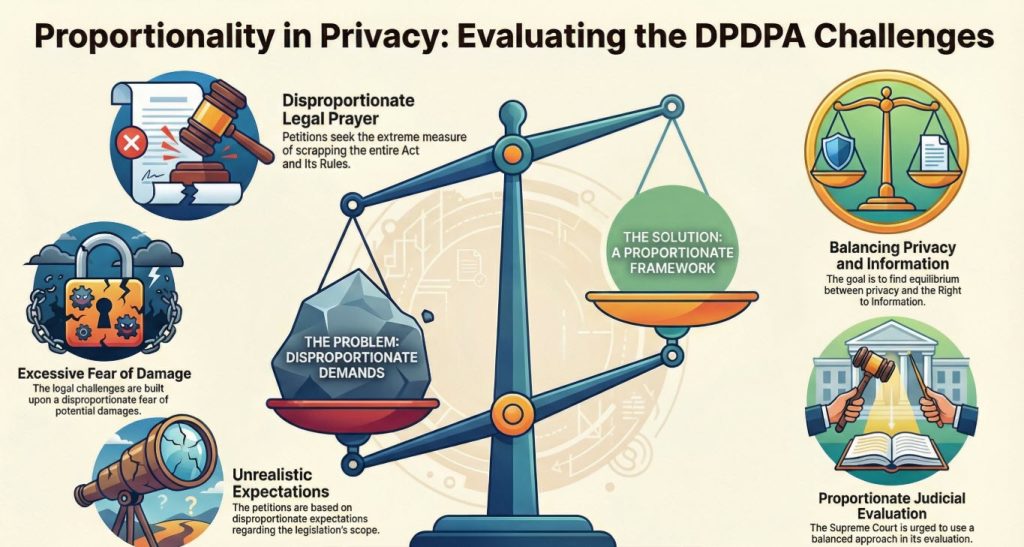

However, the petitions cumulatively pray that the entire DPDPA 2023 and the entire DPDP Rules 2025 be scrapped.

Where is proportionality in this prayer?

Had the petitioners come with a fair request, they would have asked for a reading down of Section 8(1)(j) of the RTI Act read with Section 44(3) of DPDPA 2023.

The prayer to scrap the Act and the Rules is therefore “disproportionate” to the requirement, even as the petitioners themselves frame it.

The fears of a “Surveillance Regime” and a “Blanket Ban on release of information required for public good” amount to “Disproportionate Speculation”: the prediction of a catastrophe that is not supported by any valid reasons.

The expectation that Parliament, which framed Section 8(1)(j) when there was no DPDPA 2023, should not review and revise the provision when a new law comes in is a “Disproportionate Expectation”. It implies that lawmakers do not have the right to make course corrections to the law.

Hence the petitions and the prayer constitute “disproportionate speculation of fear, disproportionate expectation and disproportionate prayer”.

We trust that the Supreme Court will first recognize that the petitioners have not come with clean hands and are seeking a disproportionate solution to an imaginary problem.

We shall demonstrate in the coming articles how acceptable solutions can address reasonable speculation and reasonable fears of misuse.

Naavi