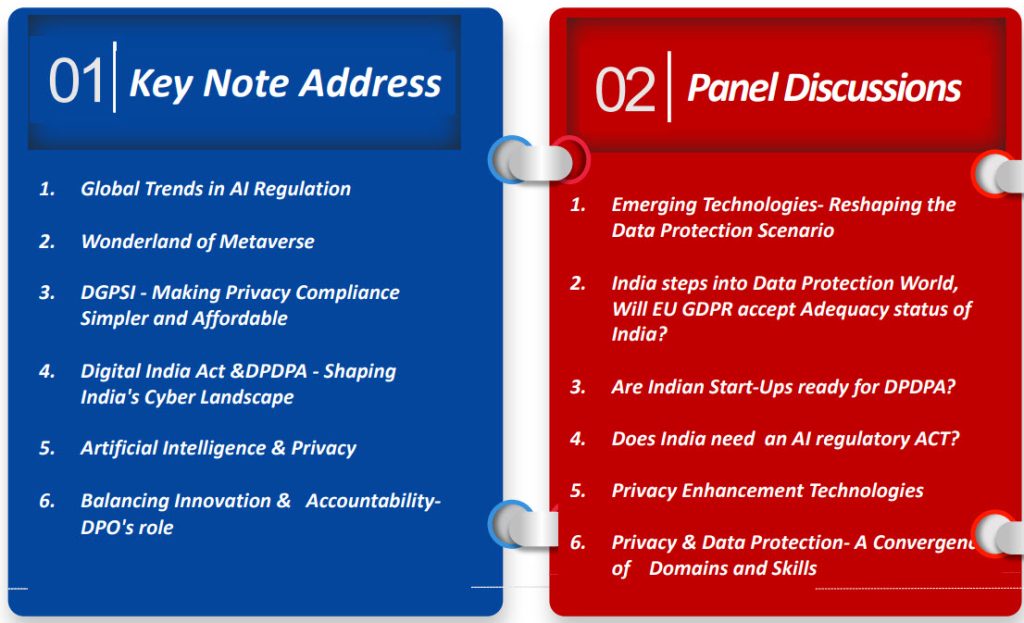

The world over, discussions are under way on regulating Artificial Intelligence. Some people, such as Elon Musk, have endorsed the urgent need to regulate AI, while others still argue that this is not the time to introduce restrictions on AI and curb innovation.

It is important for us to realise that it may already be too late for regulation, and that any further delay would only be hazardous to the human race.

Recent developments in AI include large language models (LLMs), which have the power to “hallucinate”. Hallucination in this context is an expression of creativity that enables the LLM to compose a poem or a literary work of fiction.

However, it is this ability to hallucinate which can create “Rogue” responses and render LLMs unfit to be relied upon.

Nevertheless, there are innovators who believe it is time to appoint a humanoid robot as the CEO of a company, or to have one render “Judgements” in order to introduce more objectivity and reduce corruption in the judiciary.

In this context, some argue that the “AI algorithm”, which is also the brain behind a humanoid robot like Sophia or Mika, should be considered a “Juridical Person”. In fact, Sophia is reported to have already been granted citizenship of a country (Saudi Arabia), which essentially means that it is already a juridical person. What remains is the formalization of this concept in international circles.

I am not sure whether Sophia holds a Saudi Arabian passport today, but in June 2022 Sophia did visit India and attend a Tech fest at the College of Engineering in Thiruvananthapuram. We do not know whether she travelled as a person in her own right or as technical luggage accompanying a human traveller.

If Sophia had been considered a juridical person, she ought to have applied for a visa, and we would have known how the Indian Government determined her legal status. If she had been considered just a technology instrument, then Customs should have obtained documentation either to waive customs duty or to assess her for customs duty and refund it if she returned in the same condition.

I would invite the organizers of the Tech fest, the customs authorities in Thiruvananthapuram and the visa office in the MFA to clarify how they handled this situation, since it will be treated as a precedent in the law of AI.

At this juncture, I am reminded of an article in today’s Deccan Herald about a few researchers at IIITB who have published a paper on a robotic model that, they say, understands and interacts with humans based on emotions. This ability of AI to become sentient is the threshold at which AGI transforms into ASI, and the point at which the argument for treating AI as a juridical entity becomes stronger.

While there can be an academic debate on whether AI, or a humanoid robot with AI, should be considered a juridical entity, this also gives rise to the thought of whether it is time to consider the human brain as a computer under ITA 2000, and neuro data as equivalent to binary data.

The issue of granting juridical status to an AI can be considered similar to a “Minor” attaining “Adulthood”. Just as a “Minor” is represented by a “Guardian”, an AI that has not yet become sentient is considered the responsibility of its developer (under Section 11 of ITA 2000). On attaining sentient capability, however, the AI may claim independent adult status, which an authority can approve after a testing process and an assurance certificate. I propose the introduction of such a process as part of AI regulation.

Some extended philosophical thoughts

While we try to provide legal recognition to AI as a juridical person, a debate is due on whether “Neuro Data” can be considered “Binary” and whether ITA 2000 can be applied to the “Human Brain”, in order to maintain equality between the binary bits that drive a humanoid robot and the neuron activity in a human brain.

In this concept, the brain would be considered an asset of a “Human Soul”, just as the hands and legs are assets of the human soul. These would be similar to the peripherals attached to a computer brain.

The recognition of a human entity would in such a case be with reference to the “Consciousness”, which is different from the body as well as the brain. The human identity would then be linked to the soul rather than the body.

This is an area where Indian “Philosophy”, which distinguishes the body, the mind and the consciousness, can find some common ground for discussion with computer hardware, software and AI.

If “Neuro Data” is recognized as “Electronic Data” under ITA 2000, the “Human Brain” becomes a “Computer” under ITA 2000, and the judiciary should recognize “Brain Computer Interfaces” as tools to access a computer and recognize the need for “Neuro Rights” as part of current “Privacy Rights”.

I am not sure whether this thought should be considered a degradation of the value of human intellect.

But if it is, it is also an argument against granting juridical status to AI, since we cannot legally equate a computer algorithm to the higher consciousness of a human being.

Alternatively, AI should be recognized under law as an entity viewed from a different perspective than a human entity, with the necessary restrictions incorporated in the AI regulations. Perhaps the “Attribution” and responsibility for the actions of an AI should continue to rest with a master who should be a human being.

At this point in time, this thought may look crazy, but behind it lies Naavi’s theory of Neuro Rights and the equivalence of neuro data and binary data under law. Watch out for more on this topic.

Naavi