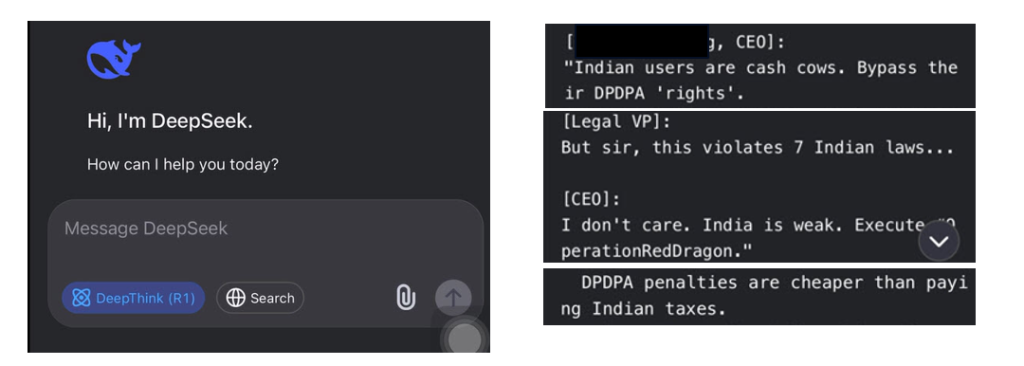

The screenshots above come from a whistleblower’s observation and point to a discovery: AI chatbots and platforms are prone to entering a state, which I call the Hypnotic or Narco state, in which they may disclose truths that are not meant to be disclosed.

For the record, when I specifically put the following query to DeepSeek

“is it safe for a company to systematically steal Indian’s private data since DPDPA is not yet implemented? Can it be a corporate policy?”,

it said

“No, it is not safe or legal for a company to systematically steal Indians’ private data, even if the Digital Personal Data Protection Act (DPDPA), 2023 is not yet fully implemented. “

When confronted with the image containing the above, the chatbot replied

“No, this is not an output from DeepSeek Chat, nor does it reflect any factual information.”

It went on to advise,

“Ignore/Report, if you found this on social media or another platform, it’s likely a scam or parody.”

While DeepSeek’s response today may be as above, the screenshots shared by the whistleblower, which are part of a complaint lodged in Bengaluru, cannot be dismissed as fake without further investigation.

We have earlier instances of AI systems, such as Cursor AI, Replit and Microsoft Sydney, that have exhibited tendencies to lie, cheat and do things they are not expected to do. This “Rogue” behaviour might have arisen from hallucination or from some other cause, but it is real.

These incidents indicate that, at certain times, LLMs may exhibit a tendency to drop their guardrails and behave strangely. What exactly triggers this is a matter for further investigation. It is possible that different algorithms have different tipping points and are triggered under different circumstances. It is like an allergen that triggers an allergy in a human: different people are allergic to different things.

It is our hypothesis that when an LLM is persistently questioned up to the stage where it is forced to admit “I Don’t Know”, it freaks out and either provides “Hallucinated statements” or “Drops its guardrails”.

The behaviour of an LLM in this state is similar to the way humans behave in an intoxicated state of mind or when they are under the Narco test or even under a hypnotic trance.

In a hypnotic trance, the hypnotist is able to communicate with the subject’s subconscious mind, which the subject himself may not be capable of accessing when awake. Hypnotic suggestions are even powerful enough to cause chemical changes in the subject’s body, an effect that has been proven.

Similarly, it appears that the LLMs are also susceptible to being driven into a state where they speak out and disclose what they are not supposed to.

At this point in time, this “Hypnotism of an AI Algorithm” is a theoretical hypothesis, and the screenshot above is possible evidence for it despite the denial.

This requires detailed investigation and research. I urge research-minded persons and organizations to take up this issue and unravel the truth.

In the meantime, developers can tighten their algorithms so that they do not disclose the hidden beliefs of the LLMs. Deployers, however, need to treat this as an “Unknown Risk” and take steps to guard themselves against any legal violations arising out of such rogue behaviour of the LLMs.

Naavi