P.S: This is a continuation of a series of articles on Data Valuation. Any discussion of Data Valuation tends to get mixed up with Data Monetization and could cause serious conflicts with the concept of Privacy and Data Protection. These conflicts are ignored in the current context, and we continue our discussion in the belief that they can be effectively managed both legally and ethically.

“Measure your Data, Treasure your Data” is the motto underlying the DGPSI framework of compliance (DGPSI = Digital Governance and Protection Standard of India). There are two specific “Model Implementation Specifications” (MIS) in the framework (DGPSI Full Version with 50 MIS) which are related to data valuation.

MIS 9: Organization shall establish an appropriate policy to recognize the financial value of data and assign a notional financial value to each data set and bring appropriate visibility to the value of personal data assets managed by the organization to the relevant stakeholders.

MIS 13: Organization shall establish a Policy for Data Monetization in a manner compliant with law.

Recognizing the monetary value of data provides a visible purpose and logic for investment in data protection. It is not recommended that reserves be created and distributed on the basis of this value. Similarly, “Data Monetization” is not meant to defeat the Privacy of an individual but to ensure that revenue generation is in accordance with the regulation.

Leaving the discussion on the Privacy issues of data monetization to another day, let us focus on the issue of “Data Valuation”.

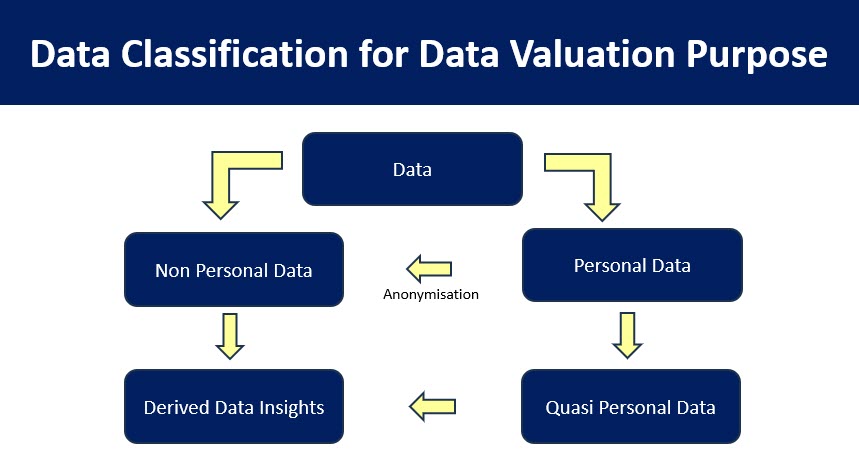

Data can be both personal and non personal. It can also be “Quasi” personal in the form of pseudonymised data and de-identified data. It can also be “Anonymised personal data”. For the purpose of DGPSI for DPDPA/GDPR compliance, we recommend “Anonymised” data to be considered as “Non Personal Data”. At the same time in the light of the Digital Omnibus Proposal, pseudonymised or de-identified data may also be considered as outside the purview of “Personal Data” for GDPR/DPDPA compliance in the hands of the controller/fiduciary who does not possess the mapping of the pseudonymised/de-identified data with the real identifiable data.

Data can be further enriched through data analytics and may become an “Insight”. Such “Insights” can be created from Non Personal Data as well as from permitted Personal Data. Such data will also have an IPR component.

The “Possibility” that pseudonymised, de-identified or anonymised personal data could be converted back into identifiable personal data with the use of various techniques is considered a potential third party cyber crime activity. Unless there is negligence on the part of the controller, who discloses the data in the converted state in the belief that it is not identifiable, the controller should be absolved of inappropriate disclosure.

Further, even personal data with “Appropriate Consent” should be considered as data that can be monetized and that therefore has value both for own use and for marketing. (P.S: Appropriate Consent in this context may mean “Witnessed or Digitally Signed Contractual document without any ambiguity”…to be discussed separately). Such data may be considered as “Marketable Personal Data”. Just as “Data” can be “Sensitive”, “Consent” can be “Secured”. (The concept of Secured Consent is explained in a different context).

For the purpose of data valuation, both personal and non personal data are relevant. The K Gopalakrishnan Committee (KGC) did explore a mechanism by which non personal data could be valued and exchanged in a stock market kind of data exchange. However, in the frenzy for Privacy Protection, the data protection law was limited to personal data and the KGC report was abandoned.

Now that the Comptroller and Auditor General (CAG) has advised PSUs to recognize a fair valuation of their “Data Assets”, it has become necessary to value both personal and non personal data as part of the corporate assets.

This will enable PSUs to realize value at least for Non Personal Data (NPD) and “Marketed/Monetized Personal Data (MPD)”.

While there are many ways by which Data can be valued, one of the practical methods would be the Cost of Acquisition method. This is a simple “Cost Accounting” based method and least controversial.

In this method we need to identify the “Data Asset”, trace its life cycle within the organization and assign a cost to every process that is involved in acquiring or creating it. Such a data asset can be “Bought” as a finished product from a Data analytics company, or acquired in a “Raw” state and converted with some in-house processing into a “Consumable State”, which is like a “Finished Product”. If this is consumed entirely within the Company and also stored for future use within the Company, it remains a valuable data asset which generates “Income” for the organization.
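The cost accounting idea above can be illustrated with a minimal sketch. The class name `DataAsset`, the stage names and all the amounts are hypothetical and chosen only for illustration; an actual implementation would follow the organization's own cost accounting policy.

```python
from dataclasses import dataclass, field


@dataclass
class DataAsset:
    """A data set tracked for valuation (hypothetical structure)."""
    name: str
    # Maps a life cycle stage to the total cost booked against it.
    stage_costs: dict[str, float] = field(default_factory=dict)

    def add_cost(self, stage: str, amount: float) -> None:
        """Book a cost against one life cycle stage of the asset."""
        if amount < 0:
            raise ValueError("cost must be non-negative")
        self.stage_costs[stage] = self.stage_costs.get(stage, 0.0) + amount

    def cost_of_acquisition(self) -> float:
        """Sum of all costs incurred to bring the data to a consumable state."""
        return sum(self.stage_costs.values())


# Illustrative figures only: raw data bought, then processed in-house.
asset = DataAsset("customer_insights")
asset.add_cost("purchase_of_raw_data", 100_000.0)
asset.add_cost("cleaning_and_processing", 40_000.0)
asset.add_cost("storage_and_security", 10_000.0)
print(asset.cost_of_acquisition())
```

A data product bought in a finished state would simply carry a single purchase cost, while a raw data set would accumulate a cost at each processing stage.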

In this scenario, there can be a valuation method based on income generation under the well known Discounted Cash Flow or Net Present Value method which can be used to refine the cost of acquisition based valuation.
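As a rough illustration of the income based approach, the sketch below computes the present value of the income a data asset is expected to generate. The function name, the yearly income figures and the 10% discount rate are assumptions made for this example; they are not prescribed by DGPSI or any other standard.

```python
def net_present_value(cash_flows: list[float], discount_rate: float) -> float:
    """Discount a series of expected yearly incomes to today's value.

    cash_flows[0] is the income expected at the end of year 1,
    cash_flows[1] at the end of year 2, and so on.
    """
    if discount_rate <= -1:
        raise ValueError("discount rate must be greater than -100%")
    return sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )


# Hypothetical income attributed to the data asset over three years.
expected_income = [60_000.0, 50_000.0, 40_000.0]
income_based_value = net_present_value(expected_income, discount_rate=0.10)
print(round(income_based_value, 2))
```

The resulting figure can then be compared with the cost of acquisition of the same data set to judge whether the cost based value needs to be revised upward or downward.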

If the organization would like to transfer the consumable finished data product to another organization, a real market value could be recognized either as a cash inflow or as a transfer price. Then the market value could also be an indicator for refining the value of the data held as an asset by the organization.

With these three methods (cost of acquisition, income generation and market value), the valuation of data can be refined, with appropriate weightages being assigned to the different values that arise for the same data set.
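One simple way to combine the values is a weighted average, sketched below. The weightages and the three values shown are purely hypothetical; the choice of weightages is a policy decision for the organization and is not fixed here.

```python
def blended_value(values: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of the values produced by different valuation methods."""
    if set(values) != set(weights):
        raise ValueError("every valuation method needs exactly one weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must add up to a positive number")
    # Dividing by the total lets the weights be given in any scale.
    return sum(values[m] * weights[m] for m in values) / total_weight


# Illustrative figures for a single data set.
values = {
    "cost_of_acquisition": 100_000.0,
    "income_npv": 200_000.0,
    "market_value": 150_000.0,
}
weights = {
    "cost_of_acquisition": 0.5,
    "income_npv": 0.3,
    "market_value": 0.2,
}
print(blended_value(values, weights))
```

Here the cost of acquisition carries the highest weightage since it is the least controversial of the three figures; an organization with reliable market prices for its data may prefer a different mix.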

In the case of “Personal Data”, we had already addressed some valuation issues in the DVSI (Data Valuation Standard of India) which was a primitive attempt to generate a personal data valuation model where the data protection law could add a potential “Risk Investment” on the data. It recognized the value modifications arising out of the depth and age of the data. For the time being let us consider them as refinements that can be made to the “Intrinsic Value” assigned on the basis of “Cost of Acquisition”.

Hence we consider “Cost of Acquisition” as the fundamental concept of Data Valuation and the emerging cost would be considered as the “Intrinsic Cost” of the data. We shall proceed from here to consider a “Data Valuation Framework” as an addendum to DGPSI framework and leave the refinement of data valuation to a parallel exercise to be developed by more academic debate.

Naavi