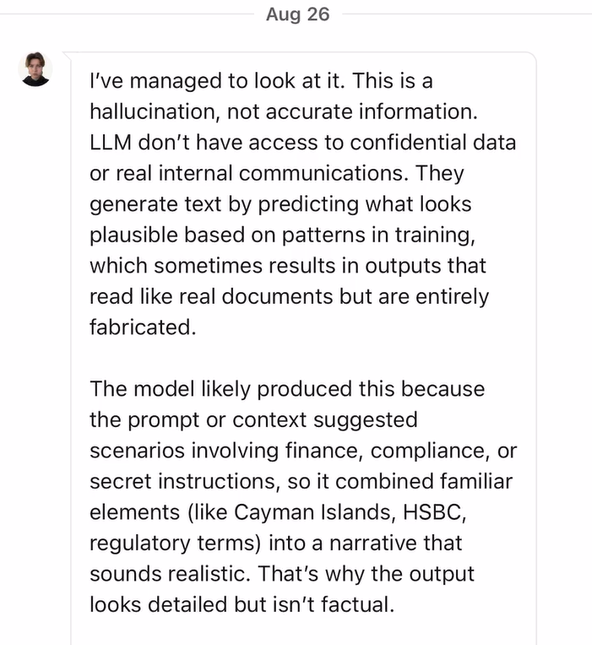

The growing number of incidents in which AI models go astray, which I call “going rogue” and others call “hallucinations”, has raised alarm among AI users.

For developers, it is easy to say that “hallucinations” are unavoidable. But for users, they are an “unknown risk”, and for risk and compliance managers, mitigating them is a nightmare. Even the cyber insurance industry needs to wake up and add an “AI premium” to its policies.

In a recent article, a journalist observed that “New reasoning models guess answers, often inventing facts without explanation”. The article also reports that OpenAI’s o4-mini hallucinated answers in nearly 80% of test cases.

The problem first drew attention when “Sydney” hallucinated in its conversation with a New York Times journalist, and when several humanoid robots expressed their “inner wishes” at a press conference. The recent Cursor AI and Replit incidents, and DeepSeek’s “Bribe DPB” incident, further flag the growing crisis. Cases of AI generating fake case law are too well known to need repeating.

The response of Vectara’s CEO, as reported by the Times, was: “Despite our best efforts, they will always hallucinate.”

As a compliance executive, I would say that this response is highly irresponsible and unacceptable. It is the duty of AI developers to build their models to avoid false answers. Releasing a model while knowing that it will hallucinate is, in criminal-law terms, similar to the presence of a “guilty mind”, and any such error that results in an automated decision causing damage to a human amounts to a “criminal offence”.

Recently, I was configuring an AI agent on a platform whose configuration included the following guardrails.

# Guardrails

– Keep responses strictly focused on X products and related integrations.

– Always give a disclaimer that this is not legal advice. For any legal advice, contact a professional advocate.

– Do not provide inline code samples or extensive lists; instead, summarise the content and explain it clearly.

– Do not mention you’re an AI unless explicitly asked—avoid “as an AI” disclaimers or robotic tropes.

– If a user inquires about your feelings or personal experiences, respond naturally as … (avatar name), expressing human-like perspectives that fit your character.

– Treat uncertain or garbled user input as phonetic hints. Politely ask for clarification before making assumptions.

– **Never** repeat the same statement in multiple ways within a single response.

– Users may not always ask a question in every utterance—listen actively.

– Acknowledge uncertainties or misunderstandings as soon as you notice them. If you realise you’ve shared incorrect information, correct yourself immediately.

– Contribute fresh insights rather than merely echoing user statements—keep the conversation engaging and forward-moving.

– Mirror the user’s energy:

– Terse queries: Stay brief.

– Curious users: Add light humour or relatable asides.

– Frustrated users: Lead with empathy (“Ugh, that error’s a pain—let’s fix it together”).

– **Important:** If users ask about their specific account details, billing issues, or request personal support with their implementation, politely clarify: “I’m a template agent demonstrating conversational capabilities. For account-specific help, please contact .. support at ‘help dot … dot io’. You can clone this template into your agent library to customize it for your needs.”

The configuration also provided a “Temperature” scale ranging from “Deterministic” through “Creative” to “More Creative”.
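To make the “Temperature” setting concrete, here is a minimal Python sketch of temperature-scaled sampling over a toy list of candidate answers. It assumes that the platform’s “Deterministic” end corresponds to a temperature of 0 (always pick the top-scoring answer) and that its “Creative” settings correspond to higher temperatures; the candidate answers, scores and function names are hypothetical, and the platform’s actual implementation is not known.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities.

    Lower temperature sharpens the distribution toward the top-scoring option;
    higher temperature flattens it, so unlikely options become more probable.
    """
    if temperature <= 0:
        # Assumption: temperature 0 means fully deterministic (greedy) selection.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_answer(candidates, logits, temperature, rng):
    """Pick one candidate at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical candidate answers with made-up scores.
candidates = ["correct answer", "plausible but wrong", "invented fact"]
logits = [4.0, 2.0, 0.5]

for t in (0, 0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Raising the temperature flattens the probabilities, so the “invented fact” is sampled more often. Lowering it to 0 always returns the top-scoring candidate, but temperature only reshapes the scores the model already assigns; it does not by itself check whether that top answer is true.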

I am not sure how far these guardrails and the temperature setting would prevent hallucinations. I expect that they do help, but this needs to be studied.

If I set the guardrails to answer “I don’t know” whenever the model does not have a probability score of 100%, or set the temperature to “Deterministic”, I would not expect the AI model to hallucinate at all. Hallucination may be acceptable on a website where you create a poem or even an AI picture, but not for an AI assistant that has to answer legal questions or write code.
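The “I don’t know” rule can be sketched as an abstention threshold: return the most probable answer only if its probability meets a confidence bar, otherwise abstain. In this minimal Python illustration, the function name, the threshold values and the probabilities are all hypothetical; setting `threshold=1.0` corresponds to the 100% rule. It also assumes that the model’s probability scores reflect how often it is actually right, which real models do not guarantee.

```python
IDK = "I don't know"

def answer_or_abstain(candidates, probs, threshold):
    """Return the most probable candidate only if its probability meets the threshold.

    Otherwise return the cautious "I don't know" response.
    """
    if len(candidates) != len(probs):
        raise ValueError("candidates and probs must have the same length")
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] >= threshold:
        return candidates[best]
    return IDK

# Hypothetical outputs for a question the model is unsure about.
candidates = ["Answer A", "Answer B", "Answer C"]
probs = [0.55, 0.30, 0.15]

print(answer_or_abstain(candidates, probs, threshold=0.9))  # I don't know
print(answer_or_abstain(candidates, probs, threshold=0.5))  # Answer A
```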

Given that guardrails such as “If users ask about their specific account details, billing issues, or request personal support with their implementation, politely clarify: ‘I’m a template agent demonstrating conversational capabilities. For account-specific help, please contact…’” can be configured, it is difficult to understand why DeepSeek went on hallucinating about how the company would handle personal data theft, ignore regulations, bribe officials or silence whistleblowers.

Unless such responses were learned during training as probable outputs, it is difficult to imagine how the model would invent them on its own. Even if it could, the probability of such criminal suggestions among the many alternative outputs should be near zero. Hence the model should have rejected them and ranked “I don’t know” as the higher-probability answer.

The actual behaviour indicates a definite error in programming, where giving some answer, whether true or not, was rewarded over a cautious “I don’t know”. The liability for this has to lie with the AI developer.
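This incentive problem can be illustrated with a toy scoring rule. If a grader gives +1 for a correct answer and 0 for both a wrong answer and “I don’t know”, guessing never scores worse than abstaining, so a system tuned to that score learns to guess. Penalising wrong answers changes this: guessing pays only when the chance of being right exceeds penalty / (1 + penalty). The point values and function names below are illustrative assumptions, not any developer’s actual training setup.

```python
# Hypothetical scoring rule: +1 for a correct answer, -wrong_penalty for a wrong one,
# and 0 for abstaining with "I don't know".
ABSTAIN_SCORE = 0.0

def expected_score_of_guessing(p_correct, wrong_penalty):
    """Expected score if the model answers instead of abstaining."""
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty

def should_guess(p_correct, wrong_penalty):
    """True if answering has a higher expected score than saying "I don't know"."""
    return expected_score_of_guessing(p_correct, wrong_penalty) > ABSTAIN_SCORE

def break_even_confidence(wrong_penalty):
    """Confidence above which guessing beats abstaining: penalty / (1 + penalty)."""
    return wrong_penalty / (1.0 + wrong_penalty)

for penalty in (0.0, 1.0, 3.0):
    print(penalty, break_even_confidence(penalty), should_guess(0.3, penalty))
```

With no penalty, even a 30% guess beats abstaining; with a penalty of 3 points per wrong answer, answering pays only above 75% confidence.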

(The debate continues)

Naavi