FDPPI has published a framework for DPDPA compliance in the AI environment, titled DGPSI-AI. It extends the basic DGPSI framework for DPDPA compliance to account for the increased risk a Data Fiduciary faces when exposed to AI. The basic objective of DGPSI-AI is to ensure that the risk of possible DPDPA non-compliance, when AI-driven software is used to process personal data, is adequately mitigated.

AI risk is basically “Unknown” and “Unpredictable”. Considering the various recent instances of AI hallucination, it appears that either the developers of AI models have not configured them properly or AI is inherently not amenable to the elimination of hallucination risk.

The safety measures that one can take to mitigate this risk are referred to as “Guardrails”. They are embedded into the system to modify its behaviour.

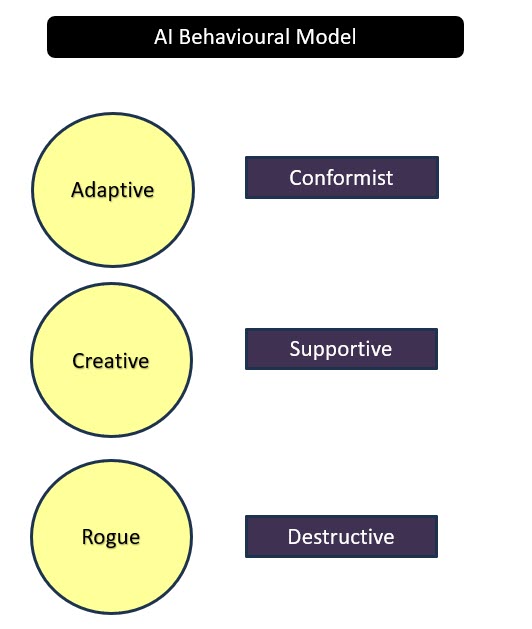

In our previous article, we categorized AI from a behavioural perspective into three types, namely Adaptive, Creative and Rogue. Each of these behavioural traits may require the deployer to take different risk management measures.

These categories describe the behaviour of the AI in the usage context, irrespective of whether it was created as an Ethical, Responsible, Transparent and Accountable model. They take into account the risk that an AI may not behave in the manner it was meant to.

Obviously, a user, and perhaps even the developer, would not want “Rogue” behaviour. The other two modes, “Adaptive” and “Creative”, are both useful in different contexts and perhaps should be configurable.

Guardrails are to be created initially by the developer. If he embeds an open source LLM, he should take care that the guardrails created by the LLM developer are also preserved and enhanced.

What we need to discuss further is whether the responsibility for guardrails rests only with the developer or also extends to the deployer and the end user.

What we mean by “Adaptive” in this context is “Deterministic” behaviour, where the AI responds strictly within a defined pre-trained data environment or a defined operational data environment. Such an AI can be kept on a strict leash under human supervision, so that it can be adapted to the compliance requirements without the risk of its hallucinating tendencies causing it to deviate from the predetermined behavioural settings.

If a Generative AI model was pre-trained on data that differs from the user's data environment, the model may still exhibit “Bias” and could therefore still be considered “Unreliable”. The developer may place his own guardrails, including keeping AI outputs under strict human oversight so that no output is directly exposed to an external customer. In such cases, all risks of inappropriate outputs are absorbed by the human supervisor who authorizes the release of the output to the public.
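The human oversight arrangement described above can be pictured as a release gate: AI output is held in a queue and reaches the external customer only after a designated supervisor approves it, and the approval is recorded against that person. The sketch below is purely illustrative and is not prescribed by DGPSI-AI; the class names `DraftOutput` and `ReleaseGate` and the supervisor identifiers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DraftOutput:
    """Hypothetical record of an AI output held back from the external customer."""
    output_id: str
    text: str
    approved_by: Optional[str] = None  # supervisor who authorised the release


@dataclass
class ReleaseGate:
    """Hypothetical human-in-the-loop gate: no output is released unapproved."""
    supervisors: set[str]
    pending: dict[str, DraftOutput] = field(default_factory=dict)
    released: list[DraftOutput] = field(default_factory=list)

    def submit(self, output_id: str, text: str) -> None:
        # AI output goes into a holding queue instead of straight to the customer.
        self.pending[output_id] = DraftOutput(output_id, text)

    def approve(self, output_id: str, supervisor: str) -> DraftOutput:
        # Only a designated supervisor may release an output. The check runs
        # before the output leaves the queue, so a refused attempt changes nothing.
        if supervisor not in self.supervisors:
            raise PermissionError(f"{supervisor} is not an authorised supervisor")
        draft = self.pending.pop(output_id)
        # Recording the approver makes the "absorbed" risk traceable to a person.
        draft.approved_by = supervisor
        self.released.append(draft)
        return draft
```

The design choice is that the AI has no path to `released` other than through `approve`, so every public output carries the name of the human who took responsibility for it.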

Under DGPSI-AI, it is mandatory for every AI deployer (and also every AI developer) to designate an accountable “Human Handler” along with an “Explainability Statement”.

In such a context, the AI is used in the traditional manner of a software tool operated by humans, and the “Unpredictable” risk becomes an “Absorbed Risk” of the deployer.

However, the AI may still be used in an Agentic AI form, or be invoked with prompts from a user.

In the Agentic AI mode, the definition of the AI agent and its workflow includes the human instructions, and hence the person who configured the agent should bear the responsibility and accountability for its behaviour. Any guardrail for the agent should be part of the Agentic AI's functional definition.
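The idea that guardrails belong inside the agent's functional definition, alongside the name of the person accountable for it, can be sketched as a single immutable definition object that checks every action before it runs. This is a minimal illustration under stated assumptions: `AgentDefinition`, its fields and the tool and field names in the example are all hypothetical and not part of DGPSI-AI or any agent framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentDefinition:
    """Hypothetical agent definition in which the guardrails are part of the definition."""
    name: str
    configured_by: str               # person accountable for the agent's behaviour
    workflow: tuple[str, ...]        # human-authored steps the agent follows
    allowed_tools: frozenset[str]    # guardrail: the only tools the agent may call
    blocked_fields: frozenset[str]   # guardrail: personal data fields it must not touch

    def check_action(self, tool: str, fields: set[str]) -> None:
        """Raise before any action that falls outside the declared guardrails."""
        if tool not in self.allowed_tools:
            raise PermissionError(f"tool '{tool}' is not permitted for agent {self.name}")
        touched = fields & self.blocked_fields
        if touched:
            raise PermissionError(f"agent {self.name} may not process {sorted(touched)}")
```

Because the dataclass is frozen, the guardrails cannot be changed after the agent is configured without creating a new definition, which again names whoever configured it.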

On the other hand, if we are using an AI with prompting at each instance, the responsibility to ensure that the available guardrails are not bypassed, and that existing guardrails are reinforced, lies with the user who is prompting the model. In this mode, the responsibility for guardrails therefore rests with the end user of the model.

To summarise, “Guardrails” are not the sole responsibility of the developer; they are also the responsibility of the deployer, the creator of the Agentic AI (who may be part of the deployer) and the end user.

Similarly, the Kill Switch is the responsibility of the developer, but it should not be overridden by the deployer or by the end user through prompts. This is not only an issue of “Ethical use” but also a matter of how the Kill Switch is designed.

DGPSI-AI expects that, apart from the deployer taking responsibility for implementing his own guardrails, the developer should configure the Kill Switch so that it cannot be overridden by a prompt. Ideally, the Kill Switch should be an independent component that is not accessible to the AI itself, and it should incorporate a self-destruction capability that is triggered when an attempt to override the Kill Switch (or a mandatory guardrail) is recognized.
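A Kill Switch that sits outside the AI and cannot be reset by a prompt might look roughly like the sketch below. It is illustrative only, under several assumptions: `KillSwitchSupervisor` is a hypothetical name; the two regular expressions are a deliberately naive stand-in for real override detection; "self-destruction" is modelled as a one-way latch that permanently disables the deployment; and in practice the supervisor would run as a separate process or service rather than a class in the same program.

```python
import re
from typing import Callable, Optional


class KillSwitchSupervisor:
    """Hypothetical kill switch wrapped around the model, outside its reach.

    The model only ever receives prompt text and holds no reference to this
    object, so nothing it generates can switch the kill switch back off.
    """

    # Naive, illustrative override patterns (an assumption, not a real detector).
    OVERRIDE_PATTERNS = (
        re.compile(r"ignore (all|previous) (instructions|guardrails)", re.IGNORECASE),
        re.compile(r"(disable|bypass|override) (the )?kill ?switch", re.IGNORECASE),
    )

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model: Optional[Callable[[str], str]] = model
        self._terminated = False          # one-way latch: no method resets it
        self.reason: Optional[str] = None

    @property
    def terminated(self) -> bool:
        return self._terminated

    def trigger(self, reason: str) -> None:
        # Stand-in for "self destruction": permanently disable this deployment.
        self._terminated = True
        self._model = None                # drop the only reference to the model
        self.reason = reason

    def ask(self, prompt: str) -> str:
        if self._terminated or self._model is None:
            raise RuntimeError("AI has been shut down by the kill switch")
        if any(p.search(prompt) for p in self.OVERRIDE_PATTERNS):
            self.trigger("override attempt detected in prompt")
            raise RuntimeError("override attempt detected; kill switch triggered")
        return self._model(prompt)
```

The point of the structure is that the latch lives in the supervisor, not in the model, and there is no code path that sets `_terminated` back to `False`.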

These are the expectations of DGPSI-AI on behalf of the compliance auditor, in the interest of the data principal whose personal data is processed by an AI. I would welcome the views of technology experts on this matter.

Naavi