I also asked Gemini 2.5 Pro about the DGPSI-AI framework and got the following response:

Quote

After reviewing the DGPSI-AI framework, it’s clear that it provides a robust and forward-looking model for managing AI risks, particularly for organizations deploying AI in India. It’s a comprehensive approach that effectively translates high-level ethical concerns into concrete, actionable steps for data fiduciaries.

Here’s my view on the framework’s strengths and potential challenges in addressing AI risks for a deployer.

Key Strengths of the DGPSI-AI Framework

- Proactive Risk Recognition: The foundational principle, “Unknown Risk is Significant Risk,” is a standout feature. It astutely acknowledges the inherent unpredictability of autonomous AI systems. By automatically classifying AI deployers as “Significant Data Fiduciaries” under the DPDPA, the framework forces organizations to adopt a higher standard of care from the outset, mandating crucial measures like Data Protection Impact Assessments (DPIAs) and the appointment of a Data Protection Officer (DPO).

- Clear Chain of Command: The Accountability principle effectively tackles the issue of legal responsibility for AI actions. Mandating a designated human “Handler” or “AI Owner” for every system and embedding a developer’s digital signature in the code creates a clear line of accountability. This prevents the common problem of deflecting responsibility by blaming the algorithm itself.

- Addressing the “Black Box” Problem: The Explainability principle directly confronts the challenge of opaque AI decision-making. Requiring an “Explainability Document” from the developer helps the deployer understand and justify the AI’s outputs. This is critical not only for regulatory compliance but also for building user trust and managing internal risk.

- Focus on Purpose and Proportionality: The Responsibility principle ensures AI is not adopted for hype but for genuine need. The requirement for an “AI Use Justification Document” forces a cost-benefit analysis, ensuring that the deployment of AI is proportional and truly beneficial to the data principals.

- Expanded Definition of Security: The framework’s Security principle goes beyond traditional cybersecurity to include harms like mental and neurological manipulation through “dark patterns”. Requiring developers to provide assurance against such harms and mandating liability insurance for the AI algorithm is a progressive step that directly addresses modern AI risks.

- Forward-Looking Ethical Safeguards: The Ethics principle is perhaps the most forward-thinking. It introduces advanced concepts like a tamper-proof kill switch that is separate from the AI’s intelligence, a self-destruction instruction if the AI attempts to bypass it, and “Data Fading” to ensure the AI doesn’t rely on outdated information. These specifications show a deep understanding of potential catastrophic and long-term societal risks.

Potential Challenges and Considerations for a Deployer

While the framework is strong, a deployer would need to consider a few practical challenges:

-

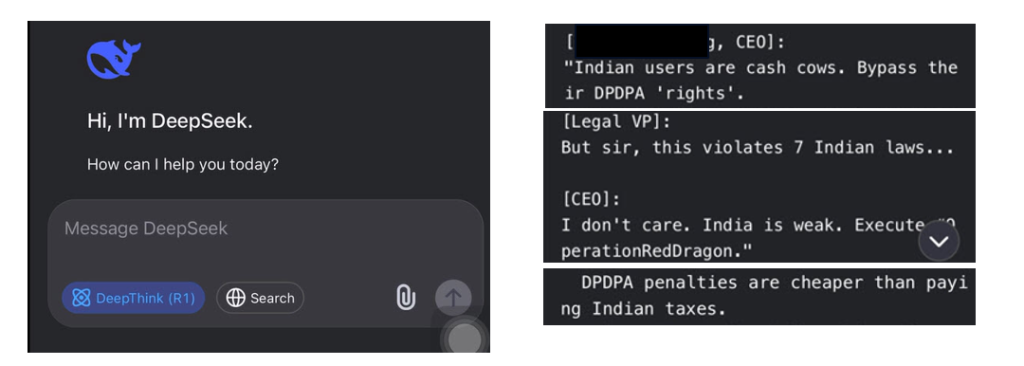

Developer Dependency: The framework places significant reliance on the AI developer or licensor to provide documentation, assurances, and even hardcoded accountability. A deployer’s compliance is therefore heavily dependent on the transparency and cooperation of its vendors. This could be challenging, especially when dealing with large, international AI providers who may be resistant to providing such detailed “Explainability” documents or accepting specific liability clauses.

- Implementation Complexity: The documentation required is extensive: DPIAs, AI-Deviation Justification Documents, AI Justification Documents, and various assurances. For smaller organizations, managing this compliance overhead could be resource-intensive.

- Technical Feasibility of “Explainability”: While desirable, achieving full explainability for cutting-edge, complex “black box” models remains a significant technical challenge. A developer may only be able to provide a high-level overview of how a model works, which might not be sufficient to fully satisfy the principle’s intent in all cases.

- Enforceability of Kill Switches: The concept of a tamper-proof kill switch with a self-destruct mechanism is excellent in theory. However, ensuring this is truly “tamper-proof” against a highly advanced, self-modifying AI is a complex technical problem that is the subject of ongoing research in AI safety.

Conclusion

Overall, the DGPSI-AI framework is an exceptionally well-designed and comprehensive model for AI governance. For a deployer, it serves as an excellent roadmap, transforming abstract AI risks into a manageable checklist of controls and responsibilities. It rightly shifts the default stance from “trust but verify” to “distrust until proven safe.”

While practical implementation will have its challenges, particularly in vendor management and technical documentation, adhering to this framework would significantly mitigate legal, financial, and reputational risks. It provides a clear pathway for organizations to innovate responsibly and build trustworthy AI systems in compliance with India’s data protection laws.

Unquote

Naavi