In the last few days since the release of the India AI Guidelines, some comments have appeared on social media which require my reaction.

The Committee, inter alia, made the following statements, all of which are welcome and laudatory.

Adopt voluntary frameworks to promote responsible innovation and mitigate risks.

Develop draft guidelines, codes, standards, respective evaluation metrics and testing frameworks in collaboration with relevant agencies and sectoral regulators.

Clarify how different entities in the AI value chain (for example, developers, deployers, end-users) are governed under existing regulations, such as the IT Act.

Impose obligations for each of these entities that are proportionate to their function and the risk of harm (for example, transparency reporting, content removal, grievance redressal, transparency, and legal assistance).

Ensure laws are complied with through timely and consistent enforcement.

Develop accountability mechanisms that would support voluntary compliance to mitigate harm (for example, self-certifications, peer monitoring, third party audits).

Rule of law is paramount, and enforcement strategies should focus on prevention of harm while allowing space for responsible innovation.

Develop a risk assessment and classification framework that is customised for India’s local context, and accounts for risks to vulnerable groups.

Encourage the adoption of voluntary frameworks to mitigate risks through principles, commitments, standards, audits, and appropriate incentives.

Guide the development and deployment of AI systems that are transparent, fair, open, non-discriminatory, explainable, and secure by design.

Require human oversight and other safeguards to mitigate loss of control risks especially in sensitive sectors involving critical infrastructure.

The Committee believes that voluntary measures can serve as an important layer of risk mitigation in India’s AI governance framework. While not legally binding, they support norms development, create accountability, and inform future regulatory choices.

When it was politely pointed out that these recommendations can already be found in practice in India through the DGPSI-AI framework, and that the Committee did not seem to have taken note of it, some ultra-enthusiastic persons commented:

1. How can people demand government to include something in their reports?

2. Can I demand Govt. to include my own IEEE paper on AI in their report?

3. Is DGPSI-AI framework an industry recognized standard like ISO/STQC?

4. What is the background of this framework authors? Are they in AI development or having any international level recognition in AI field?

5. Is referring such novel non-standard frameworks not a bias?

6. Is it correct to lobby for inclusion of a private framework?

I take it as my responsibility to answer all these questions. I am doing so below for the attention of everybody, and more particularly Mr Kumar P of Chennai, who raised these queries.

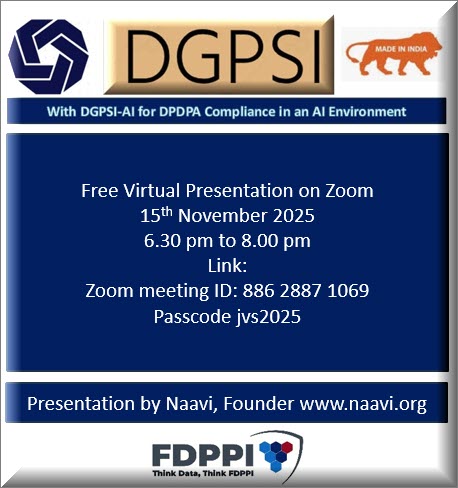

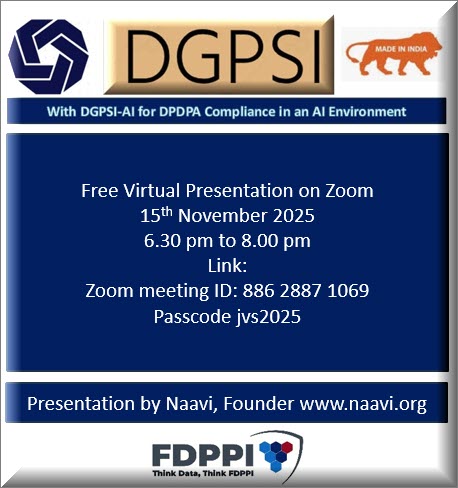

I also want to take this opportunity to introduce DGPSI and DGPSI-AI once again to professionals through an open Zoom session today. I invite all interested persons to attend, particularly the members of the India AI Guidelines drafting committee chaired by Professor Balaraman Ravindran of IIT Madras.

The link to the program is here:

Now, to answer the questions of Mr Kumar and others who may think like him, I give my views below, since I consider it my duty to clarify any misconceptions.

Clarifications on the Charge of Lobbying

- Naavi is not known for lobbying. I have been professionally active in Cyber Law and Data Protection since 1998, when the first draft of the E-Commerce Act 1998 was published. Since then, there have been many occasions when I have been critical of the Government, many when I have been supportive of it, and some when I have served as a member of Government committees. My approach has always been issue-specific, without any personal agenda, and no intention to lobby can be seen in my activities. Ideally, Mr Kumar should withdraw his statement and apologize, but I am not unduly concerned.

- There have been two occasions when I was aggressively critical of MeitY: once in 2011, over the announcement that “ISO 27001 compliance certificate is a deemed compliance of Section 43A rules”, and again recently, when I opposed the attempted takeover of the domain dpdpa.in through NIXI.

- There have been other occasions when I have been critical of Government policies, including those on crypto currencies, and I have also been critical of some Supreme Court judgements, including the Section 66A judgement, the crypto currency judgement and others. At the same time, I have often defended MeitY and its policies, particularly the Intermediary Guidelines, more vigorously than the department itself.

- These are not the actions of a person who intends to lobby the Government.

Why Express Disappointment with the Committee Report?

The disappointment was not with the recommendations of the Committee, since all the recommendations relevant to the private sector are already being implemented by Naavi.

However, it was noted that the Committee acknowledged international AI laws and frameworks, ISO frameworks and NASSCOM's work in progress on developing a framework, and finally listed references that included blog articles related to the field. While all this was fine, the absence of any mention of DGPSI or DGPSI-AI, on which plenty of information is available in the public domain, was conspicuous.

DGPSI is much more than an IEEE paper; the work by itself is worthy of recognition as a doctoral thesis. Recognition by the industry is left to professionals in the industry, who should evaluate the framework without wearing blinkers that say “only a foreign framework is good for India”. This is a colonial mindset which I personally reject outright.

Hence the emphasis that DGPSI is a “Made in India” concept, unlike ISO or NIST. It is considered the “Essence of the Essential aspects of global frameworks” and a “Viswa Guru” of such compliance frameworks. It can be extended into additional frameworks for GDPR compliance or for compliance with any of the 140-plus other data protection laws around the world.

I agree that the omission of a reference in the report could be due to innocent ignorance of the facts, or to a mistake by the MeitY official who failed to place his research on these developments before the Committee. However, it is unthinkable that the experts on the Committee were unaware of these developments, or that they did not consider the work worthy of acknowledgement, which would not have required them to agree in the least with any of its contents.

Hence I rightly presume that there was some ulterior motive, and it was my duty to point it out, since this is a Government committee.

What is DGPSI? DGPSI-AI?

Lastly, it was my duty to give the public another opportunity to understand the DGPSI and DGPSI-AI frameworks. Though books on the framework have already been published, I thought it necessary to hold a free workshop introducing DGPSI and DGPSI-AI. I hope all the sceptics attend the event.

Who Should Comment on the Committee’s Report?

In my opinion, a framework need not be endorsed by ISO to be considered useful for the industry. DGPSI is a “Made in India” framework and we are proud of it. The Committee also should have been proud of it.

In fact, DGPSI compares extremely well even with the recent ISO 27701:2025, and I will shortly be part of a training program for professionals that will teach ISO 27701 and DGPSI together as a combo offer.

We have already mapped ISO 27701:2025 against DGPSI and are satisfied that the DGPSI framework covers all the requirements of ISO 27701. The reverse may not be true, but this is not a criticism of ISO 27701: it was not designed for DPDPA 2023 compliance and hence is not expected to cover all aspects of DGPSI, which is meant for DPDPA 2023.

If critics feel that only persons with international recognition in the AI field should comment, I disagree. I am discussing AI from the deployer’s perspective, and it is the right of the consumer of AI to comment on the recommendations of the Committee and even to oppose the prioritization of innovation over public safety.

Questioning someone's AI qualifications before they comment is like asking Mr Modi how he discharges the functions of the Prime Minister of India without an MA in Political Science. The proof of the pudding is in the eating. If somebody holds a qualification in AI issued by some organization, or has published or peer-reviewed some papers, he does not automatically become an authority on the impact of AI on consumers. My comments relate more to the consumer experience, and DGPSI-AI is a framework for AI deployers who are Data Fiduciaries under DPDPA 2023. I am sure many AI graduates would not even know the difference between a “Data Fiduciary” and a “Data Processor”.

Referring to a framework such as DGPSI is not a “Bias”; it is a factual development in the history of AI regulation in India and should have been taken note of by the Committee. On the other hand, suppressing all information about it was “Bias”. Avoiding the mention is like the attempt by Mrs Indira Gandhi to bury a time capsule of historical facts about India, which would have misled future generations.

Why Has Naavi Turned Aggressive on This Issue?

Naavi is being aggressive on this issue because, if this is not questioned, a follow-up guideline from the Ministry could well state that “A Certificate of ISO 27701 compliance is considered a deemed compliance of DPDPA.” A similar notification was issued by DIT in 2011, stating that “ISO 27001 certificate is a deemed compliance of section 43A of ITA 2000”.😍

This 2011 endorsement by DIT was used extensively to market ISO 27001, with the ISO organization quoting it on their websites (a search of Naavi.org would provide the necessary information). DIT was later forced to clarify, in response to an RTI application, that the intention was not to declare ISO 27001 a deemed compliance and that it was only an example (check Naavi.org for more on this if interested).

I am highlighting these comments here since I will not be alluding to these controversies during this evening's session, where I will focus only on explaining DGPSI and DGPSI-AI to the extent time permits.

Apologies in case I have hurt anybody's sentiments through the above clarifications.

Naavi