(Continued from the previous article)

P.S.: This series of articles is an attempt to place some issues before the Government of India, which promises to bring in a new Data Protection Law that is futuristic, comprehensive and perfect.

In our previous article, we discussed the definition of Privacy. One of the new concepts we tried to bring out is that “Sharing” should be recognized only when identified personal data is made accessible to a human being.

In other words, if personally identified data is visible only to an algorithm and not to a human, it is not considered sharing of identified data, provided that the identity is killed within the algorithm after processing and the output contains only anonymised information.

Typically, such a situation arises when a CCTV camera captures a video. The video obviously captures the face of a person and therefore captures critical personal data. However, if the algorithm does not have access to a database of faces against which the captured picture can be compared and identified, the captured picture is only “Orphan Data”, which has an “Identity parameter” but is not “Identifiable”. The output, let us say a report of how many people passed a particular point as captured by the camera, is devoid of identity and is therefore not personal information.

The algorithm may have an AI element where the captured data is compared to a database of known criminals. If there is a match, the data is escalated to a human being, whereas if there is no match, it is discarded. In such a case, the discarded information does not constitute personal data access, while only the smaller set of identified data passed on to human attention constitutes “Data Access” or “Data Sharing”.
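The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Capture`, `Report` and `process` are hypothetical, and the face match is reduced to an exact lookup of a made-up signature string, whereas real face matching is approximate and far more involved.

```python
from dataclasses import dataclass, field


@dataclass
class Capture:
    """One camera capture, reduced to a hypothetical face signature."""
    camera_id: str
    face_signature: str


@dataclass
class Report:
    """Everything that leaves the algorithm and can reach a human."""
    people_counted: int = 0
    escalated: list = field(default_factory=list)


def process(captures, watchlist):
    """Count every capture, escalate watchlist matches, drop the rest."""
    report = Report()
    for capture in captures:
        # The count carries no identity, so it is anonymised output.
        report.people_counted += 1
        if capture.face_signature in watchlist:
            # Only this smaller set is passed on to human attention.
            report.escalated.append(capture)
        # Non-matching captures are never copied into the report;
        # their identity parameter stays inside the algorithm.
    return report


captures = [Capture("cam1", "sig-a"), Capture("cam1", "sig-b"), Capture("cam1", "sig-c")]
report = process(captures, watchlist={"sig-b"})
print(report.people_counted, len(report.escalated))
```

In this sketch the report is the only thing a human ever sees, so “Data Access” in the sense used here would arise only for the entries in `escalated`.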

Further, the definition provided yesterday used a somewhat strange-looking explanation of “Sharing” as

“..making the information available to another human being in such form that it can be experienced by the receiver through any of the senses of seeing, hearing, touching, smelling or tasting of a human..”

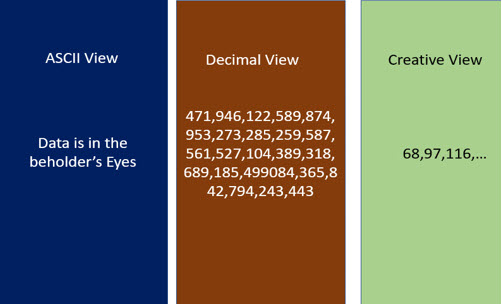

This goes with my proposition that “Data is in the beholder’s eyes” and “Data” is “Data” only when a human being is able to perceive it through his senses.

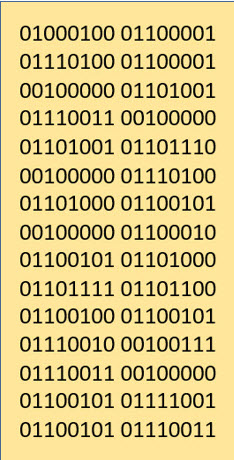

For example, let us look at the adjoining document, which represents a binary stream.

A normal human being cannot make any meaning out of this binary expression. Therefore, if it is accessed by a human being, it is “Un-identifiable” information.

A computing device may, however, be able to make meaning out of it.

For example, if the device uses a binary-to-ASCII converter, it will read the binary stream as “Data is in the beholder’s eyes”. Alternatively, if the device uses a binary-to-decimal converter, the stream could be read as one huge number. If the AI decides to consider each set separated by a space as a separate readable binary stream, it will read this as a series of numbers.
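The three readings can be tried out with a short Python sketch. The function names are illustrative only, and the sample stream is built here from the single word “Data” rather than copied from the adjoining image.

```python
def to_binary_stream(text):
    """Encode ASCII text as space-separated 8-bit groups."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def as_ascii(stream):
    """Reading 1: treat each 8-bit group as one ASCII character."""
    return "".join(chr(int(group, 2)) for group in stream.split())


def as_one_number(stream):
    """Reading 2: ignore the spaces and treat the stream as one binary number."""
    return int(stream.replace(" ", ""), 2)


def as_number_series(stream):
    """Reading 3: treat each space-separated group as its own number."""
    return [int(group, 2) for group in stream.split()]


stream = to_binary_stream("Data")
print(stream)                    # 01000100 01100001 01110100 01100001
print(as_ascii(stream))          # Data
print(as_number_series(stream))  # [68, 97, 116, 97]
print(as_one_number(stream))     # a single large decimal number
```

The same bits yield a word, a list of small numbers or one large number, depending entirely on the converter chosen by the reader.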

Similarly, if the binary stream was a name, the human cannot “Experience” it as a name because he is not a binary reader. Hence, whether a binary stream is “Simple data”, “a Name” or a “Number” is determined by the human being to whom it becomes visible. In this context, “visibility” refers to the sentence in English or the number in decimal form. If the reader is illiterate, even the converted information may be considered “Not identifiable”. At the same time, if the person receiving the information is a “Binary expert who can visualize the binary values”, he may be a computer in himself and may consider the information “Identifiable”.

It is for these reasons that, in Naavi’s Theory of Data, one of the hypotheses is that “Data is in the beholder’s eyes”.

The “Experience” in this case is “Readability” through the sensory perception of “Sight”. A similar “Experience” can be recognized if the data can be converted into “Sound” through an appropriate processing and output device. Theoretically, it can also be converted into a sense of touch, smell or taste if there are appropriate devices to convert it into such forms.

If there is a “Neuro input device” associated, then the binary stream can be directly input into the human brain by a thought and it can be perceived as either a sentence or number as the person decides.

These thoughts have been incorporated in the definition of “Privacy” and “Sharing” which was briefly put out in the previous article.

The thought is definitely beyond the “GDPR limits” and requires some deep thinking before the scope of the definition can be understood.

In summary, the thought process is:

If an AI algorithm can be designed so that identifiable data is processed in such a manner that identity is killed within the algorithm, then there is no privacy concern. In fact, a normal “Anonymizing” algorithm is one such device, which takes in identifiable information and spits out anonymous information. In this school of thought, such processing does not require consent and does not constitute viewing of identifiable data even by the owner of the algorithm (as long as there is no admin override).

I request all of you to read this article and the previous article once again and send me your feedback.

P.S.: These discussions are presently meant for debate and are a work in progress awaiting more inputs for further refinement. It is understood that the Government may already have a draft and may completely ignore all these recommendations. However, it is considered that these suggestions will assist in the development of “Jurisprudence” in the field of Data Governance in India, and hence these discussions will continue until the Government releases its own version for further debate. Other professionals who are interested in this exercise, particularly Research and Academic organizations, are invited to participate. Since this exercise is too complex to institutionalize, it is being presented at this stage only as the thoughts of Naavi. Views expressed here may be considered the personal views of Naavi and not those of FDPPI or any other organization that Naavi may be associated with.

Naavi

I am adding a reply to one of the comments received on LinkedIn:

Question:

Consider the situation of Google processing your personal data from cookies or its servers and showing you a specific ad. Google claims this automatic processing and output is anonymous.

So your suggestion to allow this?

Answer